
Graphical / Re: Magochocobo Final Fantasy VIII characters textures

« on: 2012-08-21 19:54:54 »

Excellent work. Final Fantasy VII would appreciate some love, I'd imagine. I look forward to seeing these implemented.


I've completely rewritten the GLSL shader I uploaded a while back (it was having trouble on some GPUs with older shader models). So far, it includes:

- Ability to turn each feature on/off and customize it

- HDRBloom

- 5xBR-FXAA

- Full Screen SSAO (Yes, SSAO).

I will upload it here during the week, in case anyone would like it, once I've finished testing it and I'm satisfied with it.

If any other scripters/programmers would care to take a look and help optimize the code for extra speed, send me a PM.

PS: This shader works both with Aali's OGL driver and with any PS1 emulator whose GPU plugin supports GLSL shaders (Pete's OGL2, gpuBladeSoft, etc.), for those who prefer to play FF VIII on an emulator (the PC version's sprites are horrid).

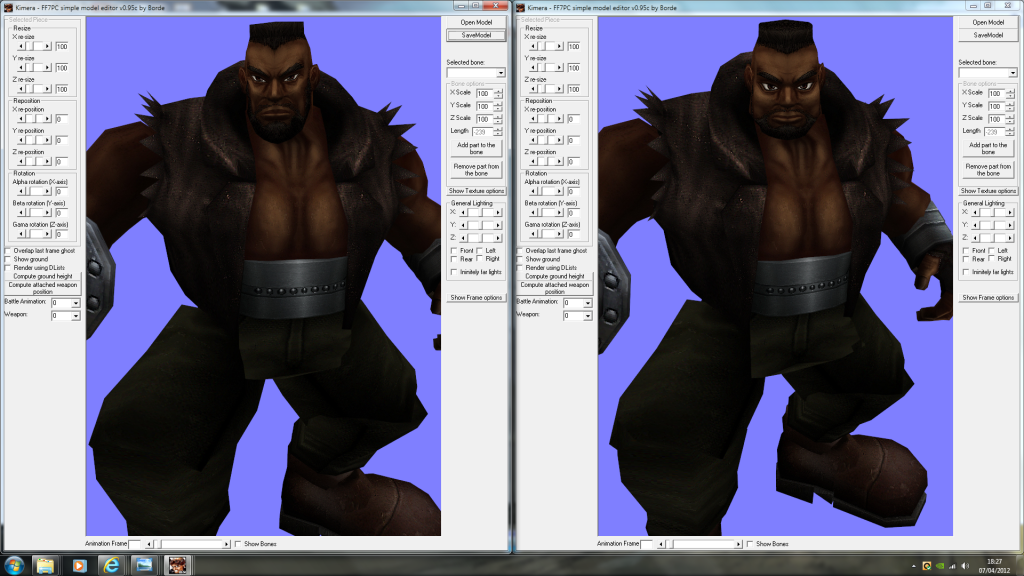

Are you using this model?

FF7 HQ Aerith mod.rar (megaten Aerith battle model, Qhimm file)

There is an issue with the number of texture slots for this model.

The model has the texture slots set to 12. The maximum number of slots is 10.

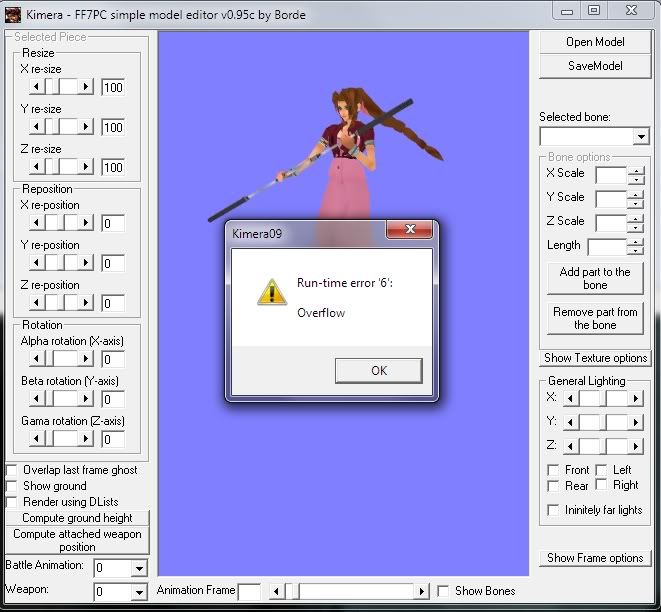

This creates an error in Kimera when loading the model.

You can fix this error by loading the model in PCreator and setting the texture slots to 10.

If Kimera works fine on one machine and fails on another, my guess is that it's most likely due to different versions of the VBRUN libraries. I also found quite a few overflow errors when loading 3DS files recently, because the values weren't automatically cast from Integer to Long. VB sure is a strange language...
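A minimal Python emulation of the VB6 typing rule behind this kind of overflow, assuming the errors come from arithmetic on Integer values: VB6 gives `Integer * Integer` an Integer result, so a product above 32767 raises run-time error 6 (Overflow) even when it is assigned to a Long, unless one operand is first converted with `CLng`. The `face_count` variable, its value, and the `vb6_multiply` helper are hypothetical and not taken from Kimera's source.

```python
# VB6 integral ranges: Integer is signed 16-bit, Long is signed 32-bit.
INTEGER_RANGE = (-32768, 32767)
LONG_RANGE = (-2**31, 2**31 - 1)


class VB6Overflow(ArithmeticError):
    """Stands in for VB6 run-time error 6 ("Overflow")."""


def vb6_multiply(a: int, a_is_long: bool, b: int, b_is_long: bool) -> int:
    """Multiply the way VB6 types the expression: Long only if an operand is Long."""
    low, high = LONG_RANGE if (a_is_long or b_is_long) else INTEGER_RANGE
    result = a * b
    if not low <= result <= high:
        raise VB6Overflow(f"{a} * {b} = {result} does not fit in the result type")
    return result


face_count = 20000  # hypothetical face count read from a 3DS file into an Integer

# Integer * Integer literal: evaluated as Integer, so 60000 overflows,
# even if the variable on the left-hand side is declared As Long.
try:
    vb6_multiply(face_count, False, 3, False)
except VB6Overflow as err:
    print("Overflow:", err)

# CLng(face_count) * 3: one operand is Long, so the product is computed as Long.
print(vb6_multiply(face_count, True, 3, False))
```

Wrapping an operand in `CLng` (or declaring the variable `As Long` before the arithmetic) is the usual VB6 way to avoid this.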

Step 1: remove all the lighting stuff (I don't know why you based it on the lighting shaders in the first place); this will save at least 20 slots.

Step 2: pack all varying variables into vec4 types. Each varying will use up 4 slots regardless of its type; that is, 4 * vec3 will use up 16 slots, but 3 * vec4 will only use up 12 slots for the same total number of components (12).

Et voilà, now you should be well under 64 slots, and it should work on most cards.
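The slot arithmetic from Step 2 can be checked with a small Python sketch. It assumes the cost model described above (every varying costs 4 slots whatever its type, against a 64-slot budget) and that components can be repacked freely; in the shader itself that means splitting values by hand, e.g. storing four vec3s in three vec4s and rebuilding the fourth vec3 from their `.w` components. The function and constant names are illustrative.

```python
import math

SLOTS_PER_VARYING = 4  # cost model from the post: every varying costs 4 slots
SLOT_LIMIT = 64        # the budget mentioned in the post

# Number of scalar components in each GLSL varying type.
COMPONENTS = {"float": 1, "vec2": 2, "vec3": 3, "vec4": 4}


def slots_unpacked(varying_types):
    """Slots used when each varying is declared separately."""
    return SLOTS_PER_VARYING * len(varying_types)


def slots_packed(varying_types):
    """Slots used when all components are repacked into as few vec4s as possible."""
    total = sum(COMPONENTS[t] for t in varying_types)
    return SLOTS_PER_VARYING * math.ceil(total / 4)


def fits_budget(slots):
    """True if a slot count stays within the assumed 64-slot limit."""
    return slots <= SLOT_LIMIT


varyings = ["vec3"] * 4
print(slots_unpacked(varyings), slots_packed(varyings))
print(fits_budget(slots_packed(varyings)))
```

For the four vec3s from the example this prints `16 12` and then `True`: the same 12 components, but 4 fewer slots once packed.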

I have an nVidia card :p, and what I meant by SSAA was supersampling, although I remember it was broken at one point.

SSAA renders at a multiple of the screen resolution and scales the result back down to the screen resolution to remove the jaggies, i.e. 4x SSAA at 1080p renders at 2160p (four times as many pixels) and rescales it back down to 1080p, so you need no post-process filtering, and it produces no blur.

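A small Python sketch of the two SSAA steps, assuming ordered-grid supersampling where "4x" means four samples per pixel (a 2x2 grid, so 1080p renders at 3840x2160; reading 4x as four times per axis would instead give 7680x4320, i.e. 4320p). The scale-down here is a plain block average (box filter) over each output pixel's own samples; drivers may use other resolve filters. The function names and the tiny test image are hypothetical.

```python
import math


def ssaa_render_size(width, height, samples):
    """Render-target size for ordered-grid SSAA with `samples` samples per pixel.

    Assumes `samples` is a perfect square (4x -> 2x2 grid, 16x -> 4x4 grid).
    """
    factor = math.isqrt(samples)
    if factor * factor != samples:
        raise ValueError("ordered-grid SSAA needs a square sample count")
    return width * factor, height * factor


def downsample(image, factor):
    """Average each factor x factor block of a grayscale image (list of rows)."""
    out = []
    for y in range(0, len(image), factor):
        row = []
        for x in range(0, len(image[0]), factor):
            block = [image[y + dy][x + dx]
                     for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


print(ssaa_render_size(1920, 1080, 4))   # (3840, 2160)
print(ssaa_render_size(1920, 1080, 16))  # (7680, 4320)

# A hard black/white diagonal edge rendered at 2x per axis (2x2 output pixels).
hi_res = [
    [0, 0, 0, 255],
    [0, 0, 255, 255],
    [0, 255, 255, 255],
    [255, 255, 255, 255],
]
print(downsample(hi_res, 2))  # [[0.0, 191.25], [191.25, 255.0]]
```

The edge pixels come out at an intermediate value (191.25) instead of a hard jump from 0 to 255, which is the smoothing effect; each output pixel only blends its own samples, unlike a screen-space blur.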

Just a question

In opengl.cfg?

```
post_source = shaders/SmartBloom.post
enable_postprocessing = yes
use_shaders = yes
```

Thanks!

Those values have been found before; they either do nothing or are still being worked on, which is why they're not officially documented by Aali.